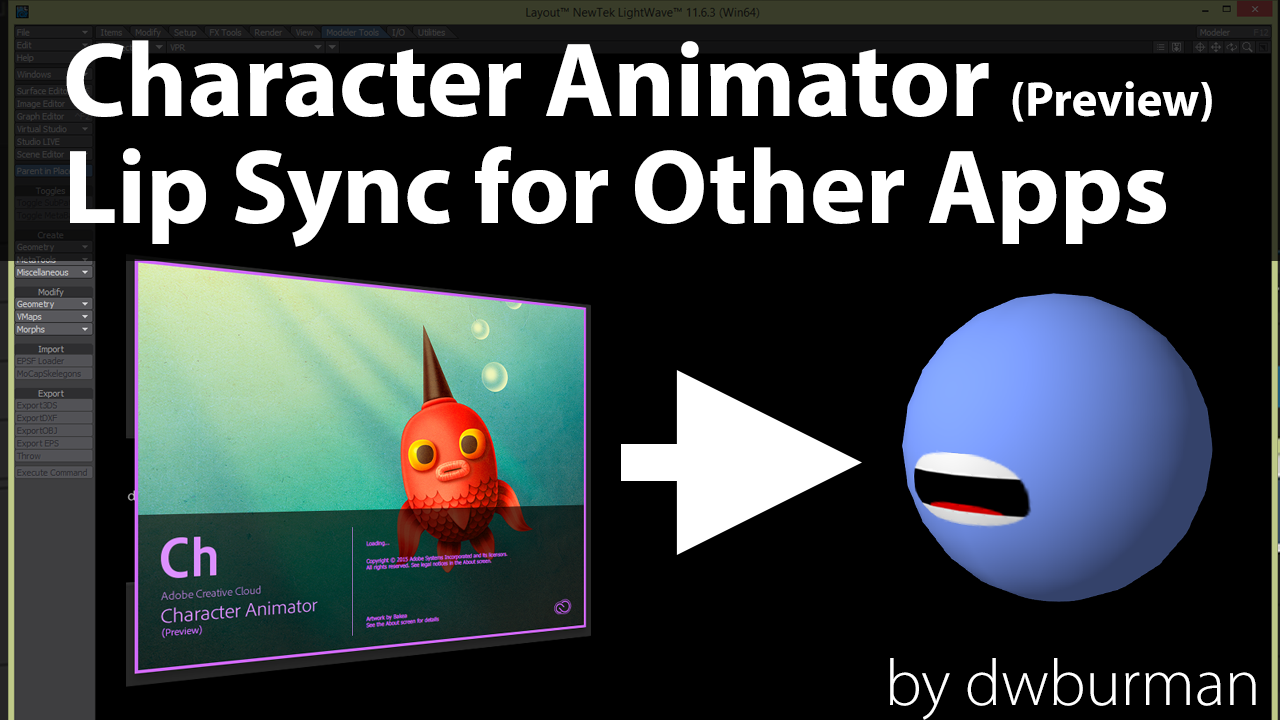

Using Adobe Character Animator for Lip Sync

Adobe Creative Cloud subscribers got a new app alongside After Effects when CC2015 was released. It’s a small, still-in-development app called Character Animator (preview). It’s designed to use webcam video and audio, plus a few other optional inputs, to drive simple, automated character animation.

DWBurman has been experimenting with using it primarily for automated lip syncing. By reducing the artwork to just the mouth and turning off head tracking, you can export a PNG sequence and use it as a texture on a 3D object in LightWave 3D or in any other application that accepts image sequences.

Learn the steps of the workflow by watching the tutorial on his YouTube channel.

This is a two-part series (so far). The next tutorial will be more LightWave-centric, showing a workflow for using Character Animator to control Morph Maps in LightWave. That video is scheduled for release on Sept 13.

2 Comments to Using Adobe Character Animator for Lip Sync

Hi, could you please tell me how to set up the voice in an animation? I just made an animated character following your instructions, but I'm having a problem with the voice. Could you please guide me?

I don’t have much experience with lip sync other than using Adobe Character Animator.

If you don’t have that, you can try Papagayo. I believe it’s free. With that one, you'll want to work one line at a time. It’s a more manual process, but you can get quick at it.

https://my.smithmicro.com/papagayo.html

I’ll also add that audio in LightWave is loaded via the Scene Editor.